Where are the analog computer startups?

We used to live in a world with only analog computers: purpose-built machines that could take an input and give an output. Take the Antikythera mechanism, an ancient analog timekeeper on steroids. While trivial for us today, a device like this would have been a huge military advantage for coordinating ship movements across time and space. Military machines for computing ballistic trajectories were all analog too, built to produce “firing solutions.” Otherwise, someone literally has to do the math and come up with a solution.

The most famous analog computer is rarely talked about, but we’ve all been exposed to its results: the Electronium. It’s an analog computer that could actively feed back on itself and build up not just a solution but a tapestry, where the hallucination is the feature. You can even make your own Electronium with ChatGPT and Sonic Pi!

My favorite way to work with ChatGPT is to lean into those hallucinations. While there are far too many AI-published books spewing across Amazon, there’s something incredible about watching an AI slowly build up a cohesive story. I’ve tried in fits and starts to articulate why I wish analog computing would make a real, serious comeback.

We already know our current designs are rocketing towards an energy crisis and that Moore’s Law is reaching a fabrication limit. We need a Sophon moment, a way to build computers in more than one dimension without boiling circuits in the process. Thankfully, the technology already exists; HP just biffed it.

1970s, HP, memristors and mazes

I’m going to skip the complications of electronic theory and the overall history of memristors. The TL;DR of where we are is roughly: transistors were invented in 1947, ushering in digital signals. Memristors were proposed, only in theory, in 1971 by Leon Chua. HP announced a physical device in 2008 and has struggled to commercialize the technology to this day. HP is no longer the shining beacon of tech that it once was. If I had to guess, someone at HP struggled to explain what memristors could do and decided it was more important to scale the technology via a known consumer product (RAM/ReRAM) than to try to build a whole new market.

Why should any of us care about an HP flub? Because we’re already 50 years behind on a kind of analog computing that could fundamentally change how we approach problems, the kind of problems memristors can solve with grace. A memristor has four very special properties:

- Its resistance changes depending on how much current flows through it over time

- The direction of the current flow matters: left to right increases resistance, right to left decreases it

- A very small amount of current can tell you what the device’s resistance is without changing it

- The set resistance is not lost when the memristor loses power
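To make those four properties concrete, here is a minimal toy model in Python. It is a sketch under loud assumptions, not a physical simulation: the class name `ToyMemristor`, the linear ohms-per-coulomb response, the resistance limits, and the hard read threshold are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class ToyMemristor:
    """Idealized memristor; every constant here is an illustrative assumption."""
    resistance: float = 100.0       # ohms, the stored state
    r_min: float = 1.0              # lowest reachable resistance, ohms
    r_max: float = 1000.0           # highest reachable resistance, ohms
    ohms_per_coulomb: float = 50.0  # how strongly charge moves the state
    read_threshold: float = 1e-3    # amps; smaller currents leave the state alone

    def apply_current(self, amps: float, seconds: float) -> None:
        """Positive amps = left to right (raises resistance); negative lowers it."""
        if abs(amps) < self.read_threshold:
            return  # too small to disturb the device
        charge = amps * seconds  # signed charge in coulombs
        new_value = self.resistance + self.ohms_per_coulomb * charge
        self.resistance = min(self.r_max, max(self.r_min, new_value))

    def read(self, probe_amps: float = 1e-6) -> float:
        """Probe with a tiny current and infer resistance from V = I * R."""
        volts = probe_amps * self.resistance
        return volts / probe_amps


m = ToyMemristor()
m.apply_current(0.5, 2.0)    # 1 C left to right: state goes 100 -> 150 ohms
m.apply_current(-0.2, 1.0)   # 0.2 C right to left: state goes 150 -> 140 ohms
print(m.read())              # probing reports the state without changing it
```

The fourth property, keeping the value without power, shows up here only as "nothing changes unless `apply_current` is called," which is the whole point: the state is the device itself, not something held alive by a power supply.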

The most boring application for a memristor circuit would be a clock that can tell you how long it has been without power. Say you had a memristor that could go from a resistance of 1 ohm to 86,400 ohms (one ohm for each of the 86,400 seconds in a day).

Every second that passed would increase the resistance of the memristor by one ohm. 8:44 AM would be the same as 31,440 seconds (the number of seconds since midnight). Say the power went out at 12:33 PM and came back at 1:42 PM. You could immediately tell that the clock was off for a little over an hour, because it would come back showing 12:33 PM instead of flashing 12:00, and you could compare that against the actual time. In general this seems very boring, but a staggering amount of software development is dedicated to keeping track of how much things have changed with high accuracy.
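The arithmetic in that clock example is easy to check in a few lines of Python. This sketch assumes one ohm per elapsed second and assumes the real time is available from somewhere else once power returns; the function names are made up for illustration.

```python
SECONDS_PER_DAY = 86_400  # matches the 1 to 86,400 ohm range in the example


def seconds_since_midnight(hour: int, minute: int, second: int = 0) -> int:
    """Convert a 24-hour wall-clock time into seconds since midnight."""
    return hour * 3600 + minute * 60 + second


def outage_seconds(stored_ohms: int, now_seconds: int) -> int:
    """Gap between the frozen reading and the real time, wrapping at midnight."""
    return (now_seconds - stored_ohms) % SECONDS_PER_DAY


print(seconds_since_midnight(8, 44))        # 31440
frozen = seconds_since_midnight(12, 33)     # 45180, the value held while unpowered
restored = seconds_since_midnight(13, 42)   # 49320, the real time when power returns
print(outage_seconds(frozen, restored))     # 4140 seconds, i.e. 69 minutes
```

One limit of this toy version: because the value wraps at midnight, an outage longer than a day looks the same as a shorter one.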

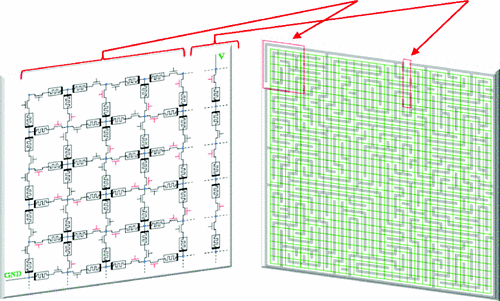

Things get interesting when you build networks of memristors. They have been used to build massively parallel solutions to mazes, finding the lowest energy path through a network. What happens when you treat these networks as multi-dimensional? What happens when you have mazes within mazes and arbitrary start and end points? You start to build something that resembles how the human brain works and stores information.
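For a feel of what "lowest energy path" means, here is a conventional, one-step-at-a-time software stand-in. To be clear about the assumptions: this is Dijkstra's algorithm on a made-up graph, not a simulation of how a memristor network settles. It treats each edge as a memristor resistance in ohms and finds the single series path with the lowest total, ignoring the way real current splits across parallel branches.

```python
import heapq


def lowest_resistance_path(network, start, goal):
    """Dijkstra over a dict {node: {neighbor: ohms}}; returns (total_ohms, path)."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        total, node, path = heapq.heappop(queue)
        if node == goal:
            return total, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, ohms in network.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (total + ohms, neighbor, path + [neighbor]))
    return float("inf"), []  # no route between start and goal


# Hypothetical four-junction maze; edge values are memristor resistances in ohms.
maze = {
    "in": {"a": 5.0, "b": 1.0},
    "a": {"out": 1.0},
    "b": {"a": 1.0, "out": 10.0},
}
print(lowest_resistance_path(maze, "in", "out"))  # (3.0, ['in', 'b', 'a', 'out'])
```

The code has to pop candidates off a queue one by one. The appeal of the physical network is that every branch is explored at the same time, simply because current flows everywhere at once.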

In data science and mathematics, a collection of data where each item goes off in many directions with many different values is described with a tensor.

Tensors and surface manifolds

A tensor can be used to describe a complicated path through space. The incredible José Valim has a very approachable video for understanding both tensors and how to write code against them.

If you’re curious about playing with Elixir and tensors, you can quickly bootstrap your experience using Livebook.

Your head, and the hairs on it, are a perfect example of a multi-dimensional tensor. First, the shape of your head is the surface manifold that contains point sources. Each point source is simply a single hair follicle. The distribution of the follicles tells you how sparse (aka bald) your vector field is. The individual strands of hair are themselves chains of tensors, because your hair can have kinks and curls and be strong or brittle across its whole length. Let’s break it down a little bit:

- 2-dimensions - the surface of your head as a complex curved and maybe even rippled space

- point source - pretend your head is broken up into zones only a little bigger than a follicle, each zone contains either zero (no hair) or a hair vector. There’s also the possibility of a compound follicle which is a fun wrinkle.

- n-dimension hair tensors - each strand of hair has its own unique set of properties to describe what it is. You have different values across the length of a single strand for every attribute: color, thickness, pliability, curl, etc.

Bear with me for a second and let’s double down on this analogy. A single strand of hair is a tensor with memory/history. Say you colored your hair. As it grows out, multiple attributes, including the color, change over time as your roots grow in. The length of hair that was colored still holds the applied pigment, but the root is the original hair color. The original color slowly grows out and re-dominates the length of the hair. If you pluck that single strand, it has a history that is knowable by external observation (much like a memristor).
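The head-and-hair picture, dye job included, can be sketched as a nested structure in plain Python. Everything concrete here is an assumption made for illustration: the 3 by 4 grid of zones, five samples per strand, the four attribute names, and using 1.0 to mean "dyed."

```python
# Hypothetical sizes and attribute names, chosen only for illustration.
ROWS, COLS = 3, 4            # scalp surface flattened into a grid of zones
SEGMENTS = 5                 # samples along each strand, root (0) to tip (4)
ATTRIBUTES = ("color", "thickness", "pliability", "curl")


def empty_strand():
    """One strand: SEGMENTS x len(ATTRIBUTES) values, all starting at 0.0."""
    return [[0.0] * len(ATTRIBUTES) for _ in range(SEGMENTS)]


# scalp[row][col] is either None (no hair in that zone) or a strand.
scalp = [[None for _ in range(COLS)] for _ in range(ROWS)]
scalp[1][2] = empty_strand()

# Dye the outer three segments; the two nearest the root keep the original color.
color = ATTRIBUTES.index("color")
for segment in scalp[1][2][2:]:
    segment[color] = 1.0

shape = (ROWS, COLS, SEGMENTS, len(ATTRIBUTES))
hairs = sum(zone is not None for row in scalp for zone in row)
print(shape)                                 # (3, 4, 5, 4)
print(hairs, "of", ROWS * COLS, "zones")     # 1 of 12 zones
print([seg[color] for seg in scalp[1][2]])   # [0.0, 0.0, 1.0, 1.0, 1.0]
```

Reading the color values from root to tip is the "external observation": the point where the values flip tells you how long ago the dye went on, without the strand ever being told to remember anything.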

Now that you have a more intuitive sense of what a tensor can represent, let’s take ourselves back to the world of memristors. Imagine a multi-dimensional network of memristors that represents a foundational model. The model is defined by its structure and how each piece is connected, like a single-color Lego set. Each memristor, though, has the flexibility to work across a range of values, unlike the weights in our current models, which are simple fixed values. It would be like a Lego set that changes color, piece by piece, as it is built, making for an individually unique set that still conforms to the original design.
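As a very rough illustration of what moving from fixed weights to memristor values could look like, here is a sketch that linearly maps a weight onto a conductance range. This is my own simplification, not an established toolchain: the function name, the conductance limits `g_lo` and `g_hi`, and the sample weights are all hypothetical, and a real design would have to deal with negative weights, noise, and drift.

```python
def weight_to_conductance(weight, w_lo, w_hi, g_lo=1e-6, g_hi=1e-4):
    """Linearly map a weight in [w_lo, w_hi] to a conductance in siemens.

    The g_lo and g_hi bounds are made-up device limits, not measured values.
    """
    if w_hi == w_lo:
        return g_lo
    fraction = (weight - w_lo) / (w_hi - w_lo)
    fraction = min(1.0, max(0.0, fraction))  # clip weights outside the range
    return g_lo + fraction * (g_hi - g_lo)


weights = [-1.0, 0.0, 0.5, 1.0]   # hypothetical trained weights
lo, hi = min(weights), max(weights)
targets = [weight_to_conductance(w, lo, hi) for w in weights]
for w, g in zip(weights, targets):
    print(f"weight {w:+.1f} -> {1 / g:,.0f} ohms")
```

The interesting part is what happens after this step: once programmed, each device can keep shifting within its range as current flows through it, which is exactly what a fixed number in a weights file cannot do.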

So please, someone, start working on scaling memristors for true analog compute. Help build frameworks to translate a trained model to an FPGA of memristors. Help claw back the future that HP managed to mess up.